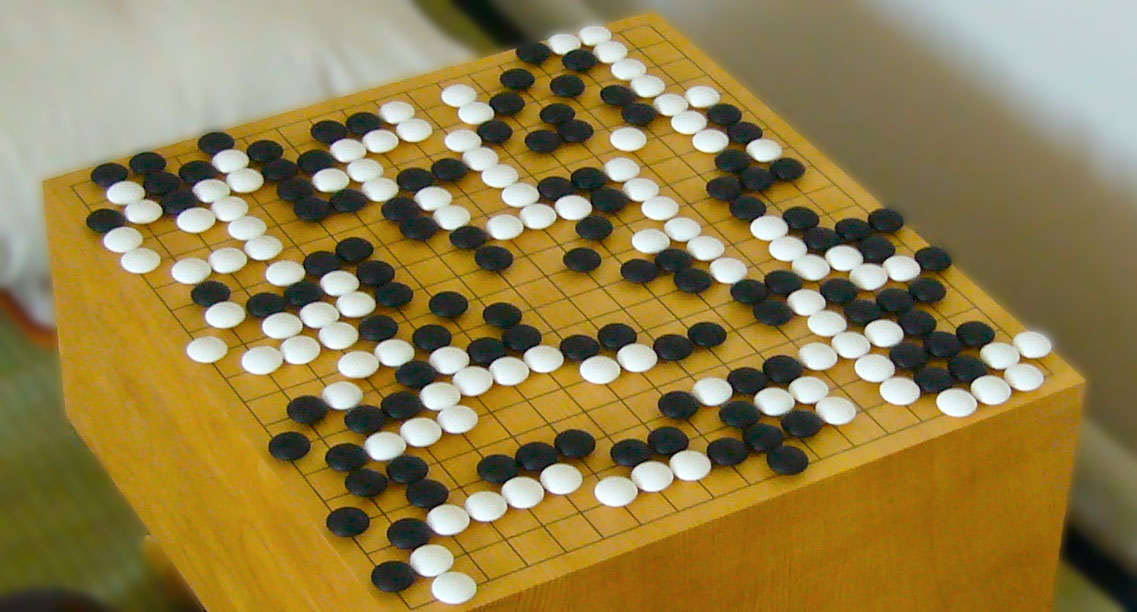

Photo credit: Wikipedia.

You might have thought that the folks at DeepMind were done after its AI, AlphaGo, beat South Korean Go world champion Lee Sedol in a five-game match. But AlphaGo apparently isn’t done yet. The team at DeepMind revealed today that it unleashed a new version of AlphaGo on the online Go community in secret.

AlphaGo, secretly playing under the usernames Magister and Master, smashed pretty much everyone it went up against. Playing top-tier opponents like Chinese 9 dan professional Nie Weiping, the AI managed an incredible 54-0 record. It went on such a tear that before DeepMind’s reveal the global Go community was abuzz about who this new mystery player might be. Some even speculated that Lee Sedol himself was behind the accounts. But the “Master” account was playing so well that by the end, most observers figured it had to be AlphaGo.


The online games were unofficial, of course, but AlphaGo nevertheless beat some of the top Go talents in the world, and it did so with remarkable speed. Next, it will aim to repeat that feat offline. With the secret testing of its upgraded AI complete, DeepMind says it now plans to schedule more official matches – like last year’s face-off with Lee Sedol.

Korean master Lee Changho is the only Go player in the world with more international titles than Lee Sedol, and Chinese champion Gu Li has the most continental titles. If AlphaGo can beat either or both of those players, a very strong argument could be made that the best Go player on earth is no longer a human. Many already believe that AlphaGo has surpassed the Go-playing abilities of any living human.

‘Pruning’ the trees

That would be an incredible accomplishment. Traditionally, Go has been difficult for AI programs to handle because it resists a computer science technique called pruning.


Basically, to decide on a move in any two-player game, a human (or AI) needs to think about their potential moves, then the opponent’s potential responses to each of those potential moves, and the potential response to those responses, and so on. Each potential move branches out with more and more possible repercussions as one looks further into the future. If you put all of those branches together and think of them as a tree, pruning is exactly what it sounds like: cutting out some of the branches so that there are fewer that must be assessed before a final decision on a move can be made.
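To make the idea concrete, here is a minimal sketch of alpha-beta pruning, a classic textbook way of cutting branches out of a two-player game tree. It is offered only as an illustration of pruning in general, not as the method AlphaGo uses. The toy tree, the leaf scores, and the names `alphabeta`, `tree`, and `visited` are all made up for this example.

```python
import math

def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf, visited=None):
    """Return the minimax value of `node`, skipping branches that cannot
    change the final decision. Leaves are numbers; inner nodes are lists."""
    if not isinstance(node, list):
        if visited is not None:
            visited.append(node)  # record every leaf we actually score
        return node

    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta, visited))
            alpha = max(alpha, best)
            if alpha >= beta:  # the opponent would never allow this line
                break          # prune the remaining children
        return best

    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta, visited))
        beta = min(beta, best)
        if alpha >= beta:  # we already have a better option elsewhere
            break          # prune the remaining children
    return best

# Hypothetical tree: three possible moves, each with two possible replies.
tree = [[3, 5], [2, 9], [0, 1]]
visited = []
value = alphabeta(tree, True, visited=visited)
print(value, visited)
```

In this toy tree only four of the six leaves are ever scored. Once the second move is shown to allow a reply worth 2, which is worse than the 3 already guaranteed by the first move, its other reply no longer matters and is skipped.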


Pruning decision trees in Go is difficult because Go has a high number of potential moves. In an average chess game, a player might have around 20 potential moves each turn, but Go players have to assess ten times as many, and each of those possible moves has ten times as many responses, and so on. Go games also tend to contain more moves in general, so the “tree” that a Go-playing AI has to “prune” and then assess before making each move is much, much bigger than the tree a chess AI has to contend with. In fact, there are more possible games of Go than there are atoms in the observable universe. Any AI that plays Go has to learn how to evaluate potential moves and prune away the less-promising branches rather than calculating every single possible variation of every single move.
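The arithmetic behind that gap is easy to check. The sketch below uses the rough per-turn figures from the paragraph above (about 20 moves for chess, ten times as many for Go) and assumes a lookahead of four moves, a depth chosen purely for illustration. The names `leaf_count` and `LOOKAHEAD` are likewise invented for this example.

```python
def leaf_count(branching: int, depth: int) -> int:
    """Number of end positions in a tree where every position
    offers `branching` moves and we look `depth` moves ahead."""
    return branching ** depth

CHESS_BRANCHING = 20                  # rough figure quoted in the article
GO_BRANCHING = 10 * CHESS_BRANCHING   # "ten times as many"
LOOKAHEAD = 4                         # assumption: illustrative depth only

chess_leaves = leaf_count(CHESS_BRANCHING, LOOKAHEAD)
go_leaves = leaf_count(GO_BRANCHING, LOOKAHEAD)
ratio = go_leaves // chess_leaves

print(f"chess: {chess_leaves:,} positions")
print(f"go:    {go_leaves:,} positions")
print(f"go tree is {ratio:,} times larger")
```

With these numbers, looking just four moves ahead means 160,000 positions in chess and 1.6 billion in Go. The tenfold difference per move compounds at every level, so the gap grows by another factor of ten with each extra move of lookahead.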

Go is also a bit more nebulous to score. When you look at a chess match, it’s usually not too difficult to determine who is winning, or which moves will be the most valuable in the long term. That, in turn, makes it relatively easy for a chess AI to choose the best moves. But Go is more difficult to pin down; it’s much harder to predict the future winner at any given moment in the game or calculate how much value a particular move will have in the endgame. That means that an AI has less to work with when it’s trying to prune out the worst potential move decisions and evaluate the rest on their merits.
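As a rough illustration of why chess is easier to score, the sketch below counts material using the conventional piece values (pawn 1, knight and bishop 3, rook 5, queen 9). This is a deliberately crude heuristic, not how any particular chess engine works, and the function name `material_balance` and the sample position are made up for this example. Go has no comparably simple yardstick.

```python
# Conventional textbook piece values; the king is not counted.
PIECE_VALUES = {"p": 1, "n": 3, "b": 3, "r": 5, "q": 9, "k": 0}

def material_balance(pieces: str) -> int:
    """Sum piece values for a string of piece letters.
    Uppercase letters are White, lowercase are Black.
    A positive result favors White, a negative one favors Black."""
    score = 0
    for ch in pieces:
        value = PIECE_VALUES.get(ch.lower())
        if value is None:
            continue  # ignore anything that is not a piece letter
        score += value if ch.isupper() else -value
    return score

# Hypothetical position: White is up exactly one rook.
balance = material_balance("KQRRBNPPPPP" + "kqrbnppppp")
print(balance)
```

A chess program can use a cheap score like this to discard obviously losing lines early. In Go, stones are all worth the same and their value depends on the shape of the whole board, so there is no quick count to lean on.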

AlphaGo has overcome these challenges using a surprisingly simple tactic: deep learning. Basically, the folks at DeepMind created a couple of neural network “brains” for AlphaGo, one that chooses moves and another that assesses the AI’s position in the game. The AI then played itself over and over, evaluating the relative value of various moves, while also getting feedback on strong moves from humans and historical Go matches. Over time, it got better and better at judging which moves are best in which circumstances.
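The division of labor between the two “brains” can be sketched in a few lines. In the toy below, one function stands in for the network that suggests moves and another for the network that judges positions. Both stand-ins are hand-written and hypothetical, as are the names `choose_move`, `toy_policy`, `toy_value`, and `toy_apply`. The real system uses trained neural networks and combines them with Monte Carlo tree search, which this sketch does not attempt.

```python
from typing import Callable, Dict, List

def choose_move(
    position: int,
    legal_moves: List[int],
    policy: Callable[[int, List[int]], Dict[int, float]],
    value: Callable[[int], float],
    apply_move: Callable[[int, int], int],
    top_k: int = 3,
) -> int:
    """Prune with the policy, then pick the survivor the value function likes best."""
    priors = policy(position, legal_moves)
    # Pruning step: keep only the top_k moves the policy finds promising.
    candidates = sorted(legal_moves, key=lambda m: priors[m], reverse=True)[:top_k]
    # Evaluation step: score the position each surviving move leads to.
    return max(candidates, key=lambda m: value(apply_move(position, m)))

# Hypothetical stand-ins: a "position" is a number and a "move" adds to it.
def toy_policy(position: int, moves: List[int]) -> Dict[int, float]:
    fixed_priors = {1: 0.05, 4: 0.10, 7: 0.40, 9: 0.30, 12: 0.15}
    return {m: fixed_priors[m] for m in moves}

def toy_value(position: int) -> float:
    return -abs(position - 10)  # positions closer to 10 are "better"

def toy_apply(position: int, move: int) -> int:
    return position + move

best = choose_move(0, [1, 4, 7, 9, 12], toy_policy, toy_value, toy_apply)
print(best)
```

Here the move-suggesting stand-in narrows five options down to three, and the position-judging stand-in then prefers 9 over the policy's own top suggestion of 7. That is the general pattern the paragraph above describes: one component trims the tree, the other scores what is left.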

This isn’t a particularly revolutionary approach, but the DeepMind team has executed it better than any previous AI builders. AlphaGo’s 54-0 online win record is merely the latest proof of that.

This post, “AI sneaks online in secret and destroys everyone playing Go,” appeared first on Tech in Asia.

from Tech in Asia https://www.techinasia.com/alphago-ai-secretly-destroys-go-players
